There has been a paradigm shift in the way we inform ourselves. Eight out of ten people in the developed world use the internet. That is a larger share than most elections draw to the ballot box. About every fourth internet user visits social networks predominantly to use them as an information source. And people still use search engines. While Microsoft’s Bing handles an estimated 200 million searches in 24 hours, Google gets hit with about 3.5 billion searches in the same day. There are one billion websites available today, with another five new ones published each second.

Should we be concerned about the information available to us? With the rise of the internet, information is right at our fingertips – regardless of its quality. Search algorithms ultimately affect what information we consume. The input variables for these algorithms are more than just the text entered into a search field. Your location, the type of computer you use and the data that has been collected about you over time (e.g. browser cookies) create a behavioural pattern, which means that you, the user, affect the search results. This observer effect is known from quantum physics, yet here it is implemented by humans as procedural behaviour in source code.

This principle has been applied since the early days of search engines. In their original paper at Stanford University in California, two students described how to create a search engine whose results reflect “an objective measure of its citation importance that corresponds well with people’s subjective idea of importance”. “People’s subjective idea of importance” is page-ranking by the number of observers, i.e. the attention a particular piece of information receives. The paper in question is Google’s initial white paper from 1997. The search rank of a webpage is determined fundamentally by the back-links from other webpages; put plainly, by the attention a site is already getting on the internet. What seems like a catch-22 for “newborn” websites is nonetheless a fundamental algorithm (amongst others) that influences our perception of the world.
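To make the back-link idea concrete, here is a minimal sketch of link-based ranking in the spirit of PageRank. It is an illustration only, not Google’s production algorithm: the function name `pagerank`, the four-page toy web and the damping factor of 0.85 are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Rank pages by the back-links they receive.

    `links` maps each page to the list of pages it links to.
    The damping factor and iteration count are illustrative assumptions.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal attention

    for _ in range(iterations):
        # Every page keeps a small baseline share of attention.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical toy web: nobody links to "newborn" or "blog" yet.
web = {
    "established": ["hub"],
    "hub": ["established"],
    "blog": ["established", "hub"],
    "newborn": ["established"],
}

ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page:12s} {score:.3f}")
```

In this toy web the pages that already receive links end up at the top, while the “newborn” page stays at the baseline score no matter what it contains. That is the catch-22 in miniature.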

Algorithms are developed by humans, who have a certain outcome in mind. Since modern developments are driven by economic motives, the aim for profit is one constant in algorithms. Data collection gives developers and machine-learning algorithms insights with which to adapt search algorithms. One major issue with current search engines like Google is that they rely on “honest attribution”, which means that the ranking of a web document does not take into account the quality of the information it returns.

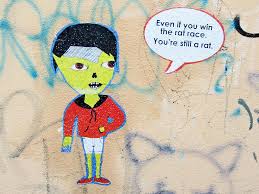

The ranking of information becomes a popularity contest: internet users vote information up through attention. Authenticity is up to each user to decide.

Humans tend to believe information from a popular source to a greater extent than information from sources they don’t perceive as popular. This can be categorised as part of the bandwagon effect, which describes the human inclination to follow the herd. Nine out of ten people pick only from the first page of results that Google offers for a search term. The herd becomes defined by the position of the information itself. Internet users ultimately determine their own truth.

The economic principle of growth has become a major force of human motivation in modern history. Information has become a carrier and source of business opportunity. Online businesses selling fake likes and fake comments have taken off without restraint. Any frame of meaning can be reduced to commercial intention. People can buy likes and comments on social networks to create the impression that they have an abundance of followers, which results in more interaction. Real people, “trolls”, are paid to spread misinformation, while bots (automated software) hit social profiles to generate content that is indistinguishable from content generated by real humans. Digital followers are assets, traffic is the turnover and real-world influence is the revenue.

Popularity generates popularity. There is not much time to reconsider and correct information before it becomes seemingly true by popular agreement. More than half of Australian internet users say they can trust “most of the news that [they] use most of the time”.

The Omniscience Bias

The internet user’s contribution to the echo chamber of social popularity is not just clicking the links on the first search results page. Social media has come to the rescue and is giving people a digital voice. We can share information easily. And we do. Nearly a million pieces of data hit Facebook’s servers per minute, and most are consumed by humans. In 2015 Facebook overtook Google as the main source of information. Only 10% of content gets shared but 80% of it gets liked, which can then be consumed by a user’s network of friends, spreading exponentially.

Internet users source and relay. On social media like Twitter people are able to tailor their information stream. On Facebook, users can determine their news stream. Ultimately the user filters information by relaying it or not. This affects the way people reflect on the world and ultimately it affects their actions. But in what way?

There is an observable bias in people’s attitudes that has developed with the use of the internet, called the “omniscience bias”. Google offers insights on the first results page, leading with the British magazine “New Statesman”: “Omniscience bias: how the internet makes us think we already know everything”. Psychologists have replicated this effect in different ways and published the results in the “Journal of Experimental Psychology”: we tend to be over-confident that we have the information we need to form opinions and make judgements. The modern internet feeds this tendency by persuading us that everything we need to know is a click away or coming soon from a feed nearby. People flick through Google searches or social media timelines using external knowledge, falling victim to the illusion that this knowledge is their own. We start to “mistake access to information for our own personal understanding of the information”.

On social media, where information spreads most actively, the omniscience bias melts thoughts together by aligning them through popularity. This feedback loop becomes the famous echo chamber in which each internet user is enclosed. As Facebook and Twitter have overtaken Google in popularity as news sources, this affects Google’s (and Bing’s) search algorithms as well. The blog “Search Engine Land” investigated whether socially generated content (“social signals”) affects the ranking of information in search engines. Google plainly answered “yes”, while Microsoft added “We do look at the social authority of a user”. Who does? Human beings at a corporate entity or machine-run algorithms? Neither option seems pleasant.

Combined with social signals of shares and likes, Google and Bing display the ultimate selection of what people want to know about, not what they need to know about. And who’s to determine what people need to know about?

The Uncertainty Effect

In the 1960s a series of experiments was conducted that suggested people are biased towards confirming their existing beliefs when processing information. This has been labelled “confirmation bias”. Warren Buffett nails the issue: “What the human being is best at doing is interpreting all new information so that their prior conclusions remain intact.” In fact, the human brain simply cannot operate without a collection of experiences, and these experiences define the framework and context in which we think, something the philosopher Immanuel Kant stated over two centuries ago. The internet has enabled us to dig out information that we ultimately incorporate as our own experience. But instead of critically reflecting on challenging ideas or facts, people tend to collect fragments of information that fortify their own beliefs. Inserting fragments of information into a personal world-view requires a contextualisation that a search engine result or a brief article does not provide. Hence consumers of information have to put it into context with whatever individual means they have.

The magazine Scientific American published an article whose authors “examined a slippery way by which people get away from facts that contradict their beliefs”. They presented people “who supported or opposed same-sex marriage with (supposed) scientific facts that supported or disputed their position. When the facts opposed their views, [their] participants – on both sides of the issue – were more likely to state that same-sex marriage isn’t actually about facts, it’s more a question of moral opinion. But, when the facts were on their side, they more often stated that their opinions were fact-based and much less about morals.”

A challenge forces the brain, figuratively, to leave its comfort zone and enter a terrain of uncertainty. The information needed to support one’s view on an issue is suddenly missing, which leaves gaps in the brain’s structure of knowledge. This “uncertainty effect” was scientifically evaluated in 2006 by researchers at MIT and led to disturbing conclusions: the brain replaces missing information with an inexplicable fright, an “irrational by-product of not knowing – that keeps us from focusing on the possibility of future rewards”. Put plainly: people want to know what they are already inclined to believe.

With the internet enabling people to submerge those islands of fear quickly, it might even have a detrimental effect on our sanity. The brain makes hypotheses about how the world should be and matches them against its sensory input. If the hypotheses are affirmed, cognition happens after very short processing times. If they do not match, the brain needs to correct its hypotheses and processing times are prolonged. In most cases, according to Singer, the act of cognition is confined to affirming already formulated hypotheses.

Today we have developed a dependency on technology, which seems to have eclipsed our reliance on logic, critical thinking and common sense by letting us evade the burden of critical reflection. In other words: the internet causes superstition to spread.

As long as the uncertainty effect is an observable fact of our brain function, there will be bias. The internet collects and reflects our human attitudes, which have the power to quickly create attitude polarisation that manifests in the real world. For what it’s worth, it will open up deeper insights into social issues.

As internet facts don’t always bear the truth – or are at least biased by selection – incorporating misinformation into one’s own world view has led to an era some people describe as “post-factual”. The internet itself is not the reason for or source of this diminution of mind but an overrated amplifier and catalyst. Finding valid information, i.e. the truth, is a personal challenge and responsibility. Spreading information has never been so simple, while consuming true facts has never been so hard. While humans behave according to their nature, the internet is learning what that nature looks like. The web consists of the people who feed it information, and big data is capable of shrinking that society’s reality to the essence of information. This offers a chance for social evolution if we learn from it. For starters, the internet is beginning to make fundamental behavioural patterns of our brains visible. From a social perspective it shows the division between critically thinking people and plain, frightened followers in the real world.
